We have seen that AI, like any new technology only perhaps more so, brings with it a whole new raft of moral considerations that may easily seem unsurmountable.

For AI computer systems have no conscience, and so the morality of any decisions they make will reflect the morality of the computer programmers - and that is where the difficulties start.

How can we be sure that the programmers will build in a morality that is benevolent and humane? Rosalind Picard, director of the Affective Computing Group at MIT, puts it succinctly: "The greater the freedom of a machine, the more it will need moral standards."

Political scientist Francis Fukuyama, author of The End of History, regards transhumanism as "the world's most dangerous idea" in that it runs the risk of affecting human rights.

His reason is that liberal democracy depends on the fact that all humans share an undefined "Factor X" on which their equal dignity and rights are grounded.

The use of enhancing technologies, he fears, could destroy Factor X. Indeed, I would want to say that Factor X has actually been defined: it is being made in the image of God.

Fukuyama writes: "Nobody knows what technological possibilities will emerge for human self-modification.

"But we can already see the stirrings of Promethean desires in how we prescribe drugs to alter the behaviour and personalities of our children.

"The environmental movement has taught us humility and respect for the integrity of nonhuman nature. We need a similar humility concerning our human nature.

"If we do not develop it soon, we may unwittingly invite the transhumanists to deface humanity with their genetic bulldozers and psychotropic shopping malls."

We have seen that one of the stated goals of transhumanism is not merely to improve but to change human nature - as implied in the very word itself.

For many of us, this raises deep ethical and theological concerns.

However, the moral questions do not first arrive when some of the transhumanists' goals are achieved. Many systems already operating or almost ready to be put into operation raise immediate ethical problems.

Autonomous vehicles are the obvious example. They have to be programmed to avoid hitting obstacles and causing damage. But on what moral principles will the choices involved be based, especially in the case of moral dilemmas? Should a self-driving car be programmed to avoid a child crossing the road if the consequence is that it unavoidably hits a bus queue of many adults? Is there any possibility of reaching any kind of consensus here?

These are real questions, not only for Christians, but for people of every viewpoint. In trying to answer them, we shall inevitably meet the widespread view that morality is subjective and relative and so there is no hope of making progress here.

However, if morality, if our ideas of right and wrong, are purely subjective, we should have to abandon any idea of moral progress (or regress), not only in the history of nations, but in the lifetime of each individual.

The very concept of moral progress implies an external moral standard by which not only to measure that a present moral state is different from an earlier one but also to pronounce that it is 'better' than the earlier one.

Without such a standard, how could one say that the moral state of a culture in which cannibalism is regarded as an abhorrent crime is any 'better' than a society in which it is an acceptable culinary practice?

Naturalism denies this. For instance, Yuval Harari asserts: "Hammurabi and the American Founding Fathers alike imagined a reality governed by universal and immutable principles of justice, such as equality or hierarchy.

"Yet the only place where such universal principles exist is in the fertile imagination of Sapiens, and in the myths they invent and tell one another. These principles have no objective validity."

Yet relativists tend to argue that since, according to them, there are no moral absolutes, no objective rights and wrongs, no-one ought to try to impose his moral views on other people.

But in arguing like that, they refute their own theory. The word 'ought' implies a moral duty. They are saying, in effect, that because there are no universal, objective principles, there is a universal moral principle binding on all objectivists, and everyone else, namely that no-one ought to impose his moral views on other people. In so saying, relativism refutes its own basic principle.

Moral subjective relativism is not liveable. When it comes to the practical affairs of daily life, a subjectivist philosopher will vigorously object if his theory is put into action to his disadvantage.

If his bank manager entertains the idea that there is no such thing as objective fairness and tries to cheat the philosopher out of £2,000, the philosopher will certainly not tolerate the manager's subjectivist and 'culturally determined' sense of values.

The fact is, as pointed out by C. S. Lewis, that our everyday behaviour reveals that we believe in a common standard that is outside ourselves.

That is shown by the fact that, from childhood on, we criticise others and excuse ourselves to them: we expect others to accept our moral judgments.

From the perspective of Genesis, this is surely precisely what you would expect if human beings are made in the image of God as moral beings and therefore hard-wired for morality.

Oddly enough, AI may be able to support this viewpoint. Think of one of the very successful AI applications in medicine we have mentioned - the accurate diagnosis of a particular disease from learning from a large database of X-rays.

Suppose now that we were to build a huge database of moral decisions made by human beings and apply machine learning to them. Many of those decisions, if not most, would be biased in some way or other, and we would have to build in methods of recognising the bias.

However, as Lianna Brinded puts it in an article for Quartz: "This is easier said than done. Human bias in hiring has been well-documented, with studies showing that even with identical CVs, men are more likely to be called in for an interview, and non-white applicants who 'whiten' their resumes also get more calls.

"But of course, AI is also not immune to biases in hiring either. We know that across industries, women and ethnic minorities are regularly burned by algorithms, from finding a job to getting healthcare. And with the greater adoption of AI and automation, this is only going to get worse."

How then do you teach fairness to a computer or program it to overcome racial or gender bias? It will only be possible if the programmers know what these things are and are capable of presenting them in a form that a machine can process.

If things go wrong because the system amplifies the bias rather than removing it, we cannot blame a conscienceless machine. Only a moral being, the human programmer, can and should be blamed.
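To give a sense of what "a form that a machine can process" might look like, here is a minimal sketch in Python. It assumes one narrow, contestable definition of fairness: that each group of applicants should be invited to interview at a similar rate. The function names, the group labels and the records are all hypothetical and made up for illustration; they are not drawn from any real hiring system, and other definitions of fairness would lead to different code.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions for each group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True if the applicant was invited to interview.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so nothing to compare
    return min(rates.values()) / highest


# Hypothetical, made-up records: group "A" and group "B" are placeholders.
records = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(records)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

Even this small sketch shows where the moral weight lies. The machine only counts; it was a human being who decided that equal invitation rates are what fairness means, and who must decide how large a disparity is acceptable.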

Clearly, this issue is central, but it would nevertheless be fascinating to apply AI in this way to a gigantic crowdsourced database of moral choices to see what commonalities arose.

In other words, we could apply AI to moral decision-making itself as a help in deciding what morality should be programmed into the various kinds of system under development.

Of course, this runs the risk of determining morality in a utilitarian manner by majority vote, which, as history shows, is not always a wise thing to do.
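The risk can be illustrated with a short Python sketch of what deciding "by majority vote" amounts to in practice. It assumes a hypothetical crowdsourced poll on a single dilemma; the option names and the vote counts are invented for illustration and do not come from any real survey.

```python
from collections import Counter


def majority_choice(votes):
    """Return (winning_option, share_of_votes) for one scenario.

    If two options tie, the one that appears first in `votes` is returned.
    """
    counts = Counter(votes)
    option, count = counts.most_common(1)[0]
    return option, count / len(votes)


# Hypothetical responses to a single self-driving car dilemma.
votes = ["swerve"] * 51 + ["stay"] * 49

choice, share = majority_choice(votes)
print(choice, share)
```

With these made-up numbers the rule that would be programmed in is carried by 51 votes to 49. The tally records which option was more popular, but it says nothing about whether that option is right.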

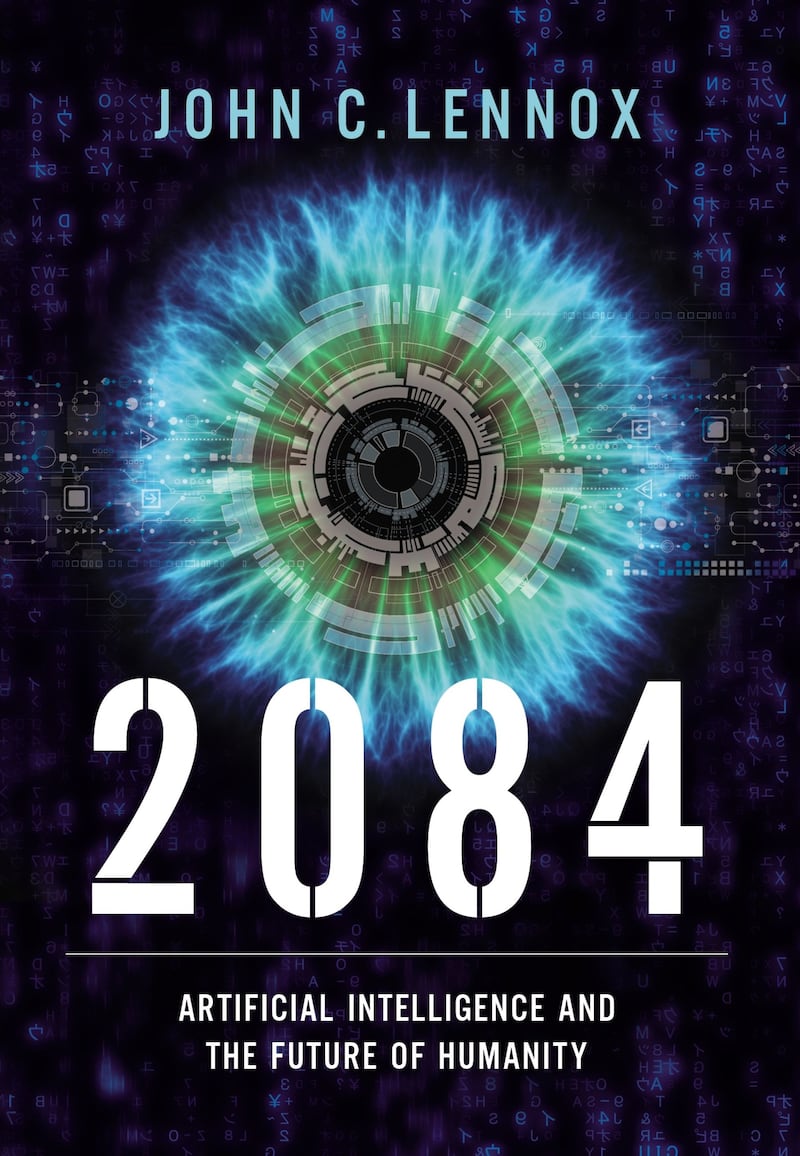

Taken from 2084: Artificial Intelligence and The Future of Humanity by John Lennox. Copyright © 2020 by Zondervan. Used by permission of Zondervan, www.zondervan.com

John C. Lennox (PhD, DPhil, DSc) is Professor of Mathematics in the University of Oxford (Emeritus), Fellow in Mathematics and the Philosophy of Science, and Pastoral Advisor at Green Templeton College, Oxford.

He is author of God's Undertaker: Has Science Buried God? on the interface between science, philosophy, and theology. He lectures extensively in North America and in Eastern and Western Europe on mathematics, the philosophy of science, and the intellectual defence of Christianity, and he has publicly debated New Atheists Richard Dawkins and Christopher Hitchens.

John is married to Sally; they have three grown children and 10 grandchildren and live near Oxford.

There is more about 2084: Artificial Intelligence and The Future of Humanity here.

Professor Lennox maps out the territory of the subject here and introduces the importance of Genesis in understanding humans here.

1. As a general reference in this area, see David Gooding and John Lennox, Doing What’s Right: Whose System of Ethics Is Good Enough?, book 4 in The Quest for Reality and Significance (Belfast: Myrtlefield, 2018)

2. Rosalind Picard, Affective Computing (Cambridge, MA: MIT Press, 1997), 134

3. See Michael Cook, “Is Transhumanism Really the World’s Most Dangerous Idea?” Mercatornet, July 20 2016; see also Francis Fukuyama, “The World’s Most Dangerous Ideas: Transhumanism”, Foreign Policy 144, no. 1 (September 2004)

4. See Francis Fukuyama, Our Posthuman Future: Consequences of the Biotechnology Revolution (New York: Farrar, Straus and Giroux, 2002), 149–51

5. Francis Fukuyama, “Special Report: Transhumanism”, Foreign Policy, October 23 2009

6. Yuval Noah Harari, Sapiens (New York: HarperCollins, 2015), 108

7. Lianna Brinded, “How to Prevent Human Bias from Infecting AI”, Quartz, March 20 2018