Child victims of sexual abuse could be affected by unintended consequences if the Government rushes through internet regulation without taking a balanced approach, an internet safety charity has said.

The Internet Watch Foundation (IWF) warned against “rushing into knee-jerk regulation which creates perverse incentives or unintended consequences to victims” and urged politicians and policymakers to work with social networks to develop the best possible regulatory framework, rather than simply imposing it on them.

The charity has set out its recommendations ahead of the Government’s long-awaited White Paper on dealing with online harms, which is expected by the end of winter.

Culture Secretary Jeremy Wright, who is in Silicon Valley meeting technology industry leaders including Facebook boss Mark Zuckerberg, told the BBC that the period of companies regulating themselves “was coming to an end”.

He said on Thursday: “If we do it effectively, as we seek to do, and we construct a system that will work, then it may be that other countries will look carefully at that model and seek to do something similar.

“So there’s good reason for these companies, be it Facebook or any other, to engage with us.”

IWF chief executive Susie Hargreaves said: “The UK already leads the world at tackling online child sexual abuse images and videos but there is definitely more that can be done, particularly in relation to tackling grooming and livestreaming, and of course regulating harmful content is important.

“My worries, however, are about rushing into knee-jerk regulation which creates perverse incentives or unintended consequences to victims and could undo all the successful work accomplished to date. Ultimately, we must avoid a heavy cost to victims of online sexual abuse.”

She added: “We recommend an outcomes-based approach where the outcomes are clearly defined and the Government should provide clarity over the results it seeks in dealing with any harm.

“There also needs to be a process to monitor this and for any results to be transparently communicated.”

Alongside an appropriate legislative framework, the charity said a well-resourced programme of education backed by long-term investment is required, as well as the flexibility to use whatever technical tools are necessary, most likely in partnership with internet technology giants.

It warned that regulation that is too restrictive could lead to increased suffering for victims and potentially cause businesses to leave the UK.

“Our ability to proactively search for this material means we currently provide hope to victims who know that we are doing what we can to remove the many, many duplicates of images of themselves,” its report said.

It added that many US companies could use its services “because we are not law enforcement or government”, leading to the swift removal of images.

“We can also move at the speed of the internet keeping pace with the development of technology,” it added.

The Government will also need to provide clarity on the results it seeks in dealing with the harm, the report said.

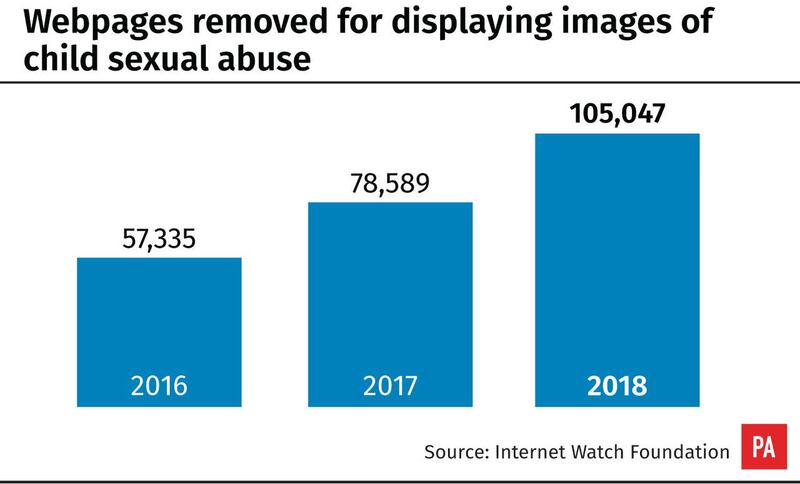

The IWF, which identifies and removes online images and videos of abuse, recently revealed that 105,047 offending webpages were removed in 2018, up by a third on the previous year.